Generative Conditional Modeling for Motion

This project aims to address challenges related to generating realistic and conditioned motions for virtual characters, considering factors like body proportions, environment interactions, and the avoidance of artifacts in motion capture.

Project Status

Ongoing

GTC Team

Dhruv Agrawal, Prof. Robert W. Sumner

Collaborators

Dr. Martin Guay, Dr. Jakob Buhmann, Dr. Dominik Borer, Prof. Dr. Siyu Tang

Motion and environment interactions are a complex set of actions that our brains solve easily. They are influenced by internal factors such as body proportions and shape, external factors such as furniture placement, and social factors such as place or time. We decipher all these conditions and know the course of action. However, encoding the same for a virtual character is much harder due to various reasons. Firstly, these conditions are not easily modeled as metrics to optimize. Furthermore, ambiguity in possible actions means that a recorded motion capture may not be the only feasible or correct motion at the time. Secondly, the set of future actions changes depending on current pose and environment interactions. For example, controlling the center of gravity becomes impossible if the character is in mid-air. Thirdly, modeling all of the joints and muscles in the human body can be very expensive. Hence, only a sparse subset of joints is used to represent human motion. As a result, some of the joints used can be an average of a number of the physical joints. This further adds ambiguity on appropriate joint-limits.

Current methods have attempted using adversarial losses [Aberman et al., 2020, Peng et al., 2021, Peng et al., 2022] and probabilistic modeling [Henter et al., 2020] in order to circumvent the metric definition and ambiguity problem. Environment based interactions have also been studied [Starke et al., 2019, Hassan et al., 2023].

While current state of the art models generate good unconditional motion, imposing conditions on the speed, position or style of the character has significant penalty on motion fidelity. This is compounded by the absence of a large homogeneous motion dataset. Unlike images and text datasets, multiple smaller animation datasets cannot be easily combined due to variations in body proportions. Homogenizing the animations to a uniform skeleton causes artifacts such as foot sliding or even completely changing the final animation.

In this project, we tackle both these problems: conditional modeling and homogenizing datasets. The first two work packages focus on conditional modeling for human poses and motion respectively. Conditioning on a secondary pose, sparse keyframes, style or velocity will be explored. The next project focuses on retargeting between different skeletal hierarchy and proportion. Finally, the last work package introduces alternative input modalities such as images, text or gestures to condition the generated motion.

Work Packages

Neural Inverse Kinematics

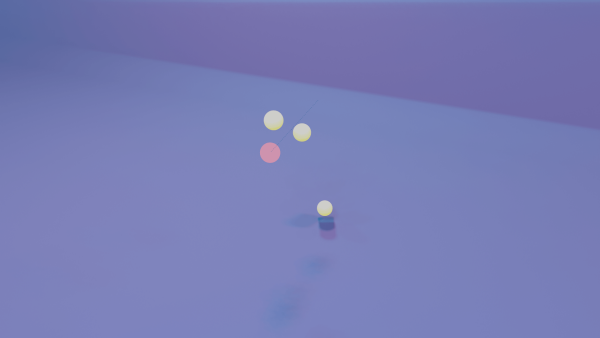

This is the first completed task of recovering full pose given a sparse set of the positional and rotational constraints. For example, the three yellow balls represent positional constraints for both hands and the right foot and the pink ball is the direction the head should be facing. Based on these constraints, we then recover the full pose.

Neural IK reduces the number of control points that artists need to use concurrently and significantly increases the speed for posing a character. By working on singular poses instead of motion sequences, we can focus on satisfying the constraints strongly and generalizing to unseen configurations without simultaneously working on temporal coherence and motion realism.

Publications

coming soon.

Citations

[Aberman et al., 2020] Aberman, K., Weng, Y., Lischinski, D., Cohen-Or, D., and Chen, B. (2020). Unpaired motion style transfer from video to animation. ACM Transactions on Graphics (TOG), 39(4):64–1.

[Hassan et al., 2023] Hassan, M., Guo, Y., Wang, T., Black, M., Fidler, S., and Peng, X. B. (2023). Synthesizing physical character-scene interactions. arXiv preprint arXiv:2302.00883.

[Henter et al., 2020] Henter, G. E., Alexanderson, S., and Beskow, J. (2020). Moglow: Probabilistic and controllable motion synthesis using normalising flows. ACM Transactions on Graphics (TOG), 39(6):1–14.

[Peng et al., 2022] Peng, X. B., Guo, Y., Halper, L., Levine, S., and Fidler, S. (2022). Ase: Large-scale reusable adversarial skill embeddings for physically simulated characters. ACM Transactions On Graphics (TOG), 41(4):1–17.

[Peng et al., 2021] Peng, X. B., Ma, Z., Abbeel, P., Levine, S., and Kanazawa, A. (2021). Amp: Adversarial motion priors for stylized physics-based character control. ACM Transactions on Graphics (ToG), 40(4):1–20.

[Starke et al., 2019] Starke, S., Zhang, H., Komura, T., and Saito, J. (2019). Neural state machine for character-scene interactions. ACM Trans. Graph., 38(6):209–1.