Visual Localisation for AR Architecture Maquettes

Dirk Hüttig

Master's Thesis, March 2021

Supervisors: Dr. Stéphane Magnenat, Dr. Fabio Zünd, Prof. Dr. Bob Sumner

Abstract

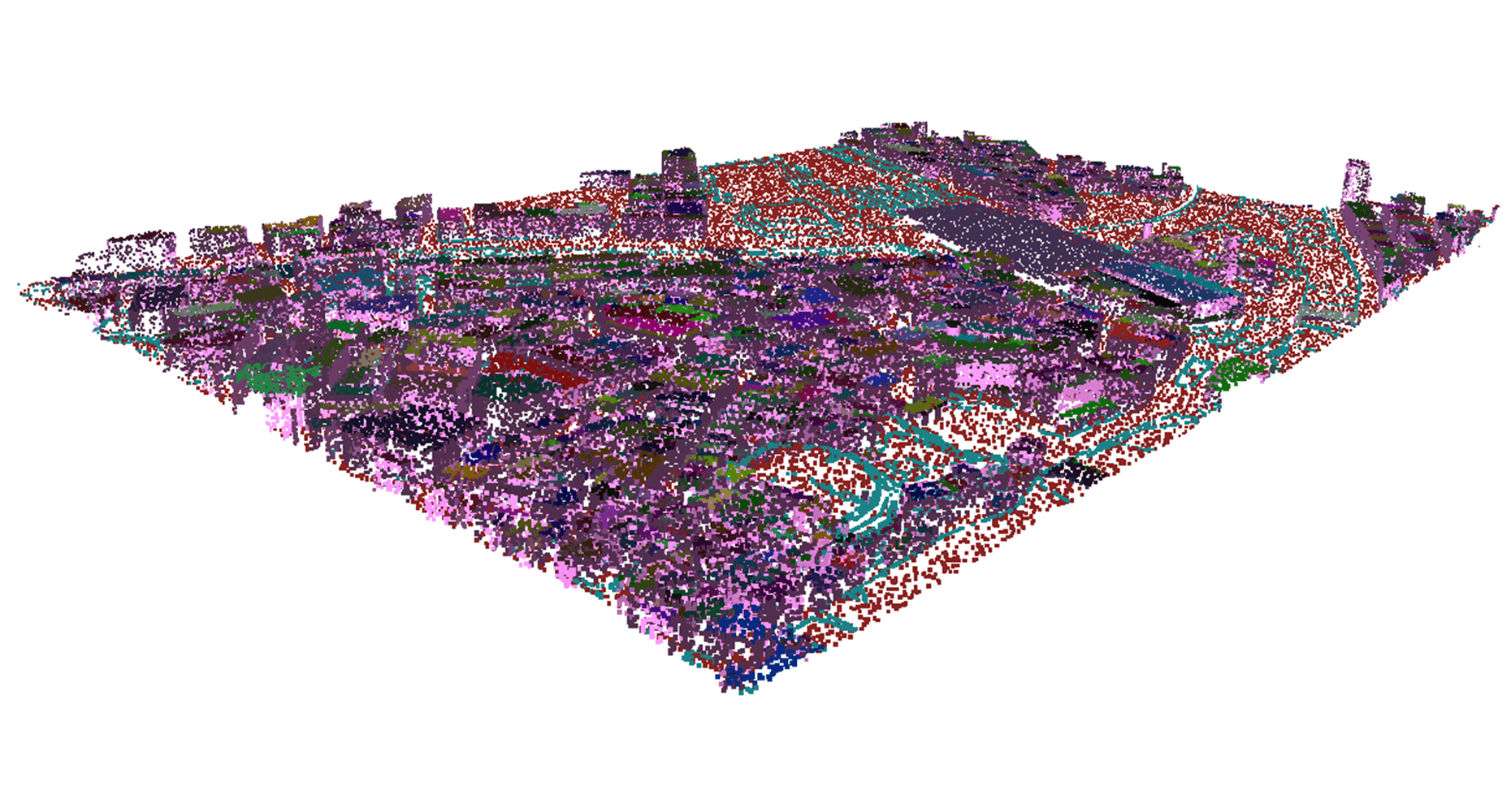

The rise of Augmented Reality (AR) has brought forth numerous applications across many fields in recent years. We found that previous work on AR for architecture and city planning has mostly been limited to visualising life-size infrastructure in the context of existing spaces. To address this gap, we explored device localisation on architecture maquettes for use in AR visualisations. This is particularly challenging because maquettes are small-scale and often uniformly coloured, which makes it hard to detect feature points for visual localisation. The main component of our proposed solution is an iOS application built specifically for the city model of Zurich and the latest iPad with a built-in LiDAR sensor. For registration, we use an Iterative Closest Point (ICP) algorithm that aligns a scanned point cloud of the maquette to a previously generated and saved reference point cloud. A C++ library, developed specifically for the city model, generates the reference point clouds and preprocesses the data for the AR visualisations used in the iOS application. Further, we developed a test suite that runs automated tests on a large number of ICP configurations to find the most suitable one. To assess the usability of the iOS application, we conducted a final user study. Participants showed high interest in the application and saw great potential in its further development.
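To make the registration step concrete, the following is a minimal sketch of point-to-point ICP. It is not the implementation used in this thesis: it is reduced to 2D, uses brute-force nearest-neighbour search, and runs a fixed number of iterations. All function names (`nearest`, `best_rigid_2d`, `apply_transform`, `icp_2d`, `rms_error`) and the toy point sets are assumptions made for illustration only.

```python
import math


def nearest(p, cloud):
    """Return the point in `cloud` closest to `p` (brute-force search)."""
    return min(cloud, key=lambda q: (q[0] - p[0]) ** 2 + (q[1] - p[1]) ** 2)


def best_rigid_2d(src, dst):
    """Least-squares rotation angle and translation mapping paired src -> dst."""
    n = len(src)
    csx = sum(p[0] for p in src) / n
    csy = sum(p[1] for p in src) / n
    cdx = sum(q[0] for q in dst) / n
    cdy = sum(q[1] for q in dst) / n
    s_dot = s_cross = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        ax, ay = px - csx, py - csy  # centred source point
        bx, by = qx - cdx, qy - cdy  # centred target point
        s_dot += ax * bx + ay * by
        s_cross += ax * by - ay * bx
    theta = math.atan2(s_cross, s_dot)
    c, s = math.cos(theta), math.sin(theta)
    # The translation moves the rotated source centroid onto the target centroid.
    tx = cdx - (c * csx - s * csy)
    ty = cdy - (s * csx + c * csy)
    return theta, tx, ty


def apply_transform(points, theta, tx, ty):
    """Rotate `points` by `theta` about the origin, then translate by (tx, ty)."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in points]


def icp_2d(scan, reference, iterations=20):
    """Align `scan` to `reference` by alternating matching and rigid fitting."""
    current = list(scan)
    for _ in range(iterations):
        matches = [nearest(p, reference) for p in current]
        theta, tx, ty = best_rigid_2d(current, matches)
        current = apply_transform(current, theta, tx, ty)
    return current


def rms_error(a, b):
    """Root-mean-square distance between index-paired point lists."""
    total = sum((p[0] - q[0]) ** 2 + (p[1] - q[1]) ** 2 for p, q in zip(a, b))
    return math.sqrt(total / len(a))


# Toy data: an L-shaped "reference" and a slightly rotated and shifted "scan".
reference = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (3.0, 0.0), (0.0, 1.0), (0.0, 2.0)]
scan = apply_transform(reference, 0.05, 0.1, -0.1)
aligned = icp_2d(scan, reference)
```

In this toy case the initial misalignment is small compared with the point spacing, so the nearest-neighbour matches are already correct and the scan snaps onto the reference. On real LiDAR scans the result depends strongly on the initial pose, outlier filtering, and the error metric, which is the kind of configuration choice the automated test suite is meant to explore.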