Facial Expression Transfer for AR Paintings

Bastian Morath

Master's Thesis, April 2021

Supervisors: Manuel Braunschweiler, Dr. Fabio Zünd, Prof. Dr. Bob Sumner

Abstract

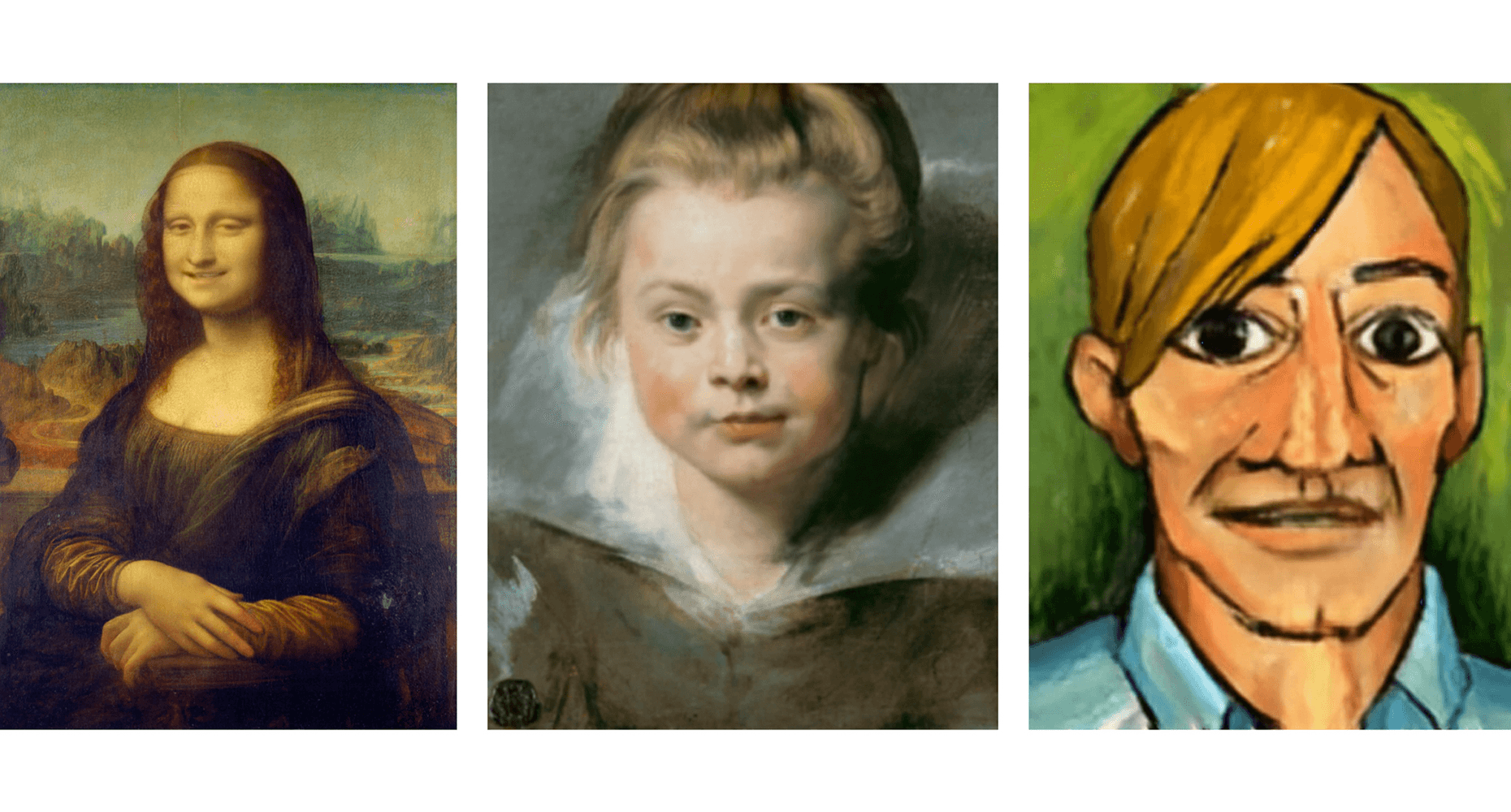

Animating a portrait painting is an intriguing way to enhance a museum visit by making painted expressions palpable. In this thesis, we developed a pipeline that animates any portrait image using a driving video. We first crop both the portrait and the driving video, removing everything but the head. Next, the two cropped inputs are fed into an existing neural network, which outputs an animated version of the cropped painting. To blend the animation with the original portrait, we propose three post-processing methods: alpha blending, which operates on the transparency layer of the animation and the original image; dampened optical flow, which gradually aligns the motion at the boundary of the animated image with the static background image; and background optical flow, which applies the motion of the animation to the background of the painting. A user study, together with our own findings, corroborates our hypothesis that each post-processing method has different strengths. Alpha blending is the fastest method and works well for portraits with a uniform background, but it has shortcomings when applied to paintings that exhibit strong lines. In those cases, the optical flow approaches perform better: they aim to directly reduce the motion at the seam between the static portrait and the animation, but are computationally more expensive. We further developed a Unity application that allows users to record driving videos, upload them to a server running the animation pipeline, and download the resulting animation; it also helped us test the pipeline. Future work could focus on improving the animation pipeline, especially for driving videos with large motion. Furthermore, the pipeline could be integrated into the existing Behind The Art platform to show the animations in Augmented Reality.
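To make the idea of alpha blending more concrete, the following is a minimal sketch and not the implementation used in this thesis. It assumes grayscale images stored as nested lists of floats and a linearly feathered alpha mask; the function names `feather_alpha` and `alpha_blend` and the `border` parameter are hypothetical and chosen for illustration only. A practical version would more likely operate on image arrays with an image-processing library.

```python
def feather_alpha(height, width, border):
    """Alpha mask that is 1.0 in the interior and ramps linearly to 0.0 at the edges.

    Assumption: a linear ramp over `border` pixels; other falloffs are possible.
    """
    mask = []
    for y in range(height):
        row = []
        for x in range(width):
            # Distance (in pixels) to the nearest edge of the crop.
            dist = min(x, y, width - 1 - x, height - 1 - y)
            row.append(min(1.0, dist / border) if border > 0 else 1.0)
        mask.append(row)
    return mask


def alpha_blend(original, animated, top, left, border=2):
    """Paste `animated` into a copy of `original` at (top, left) with a feathered seam."""
    h, w = len(animated), len(animated[0])
    alpha = feather_alpha(h, w, border)
    out = [row[:] for row in original]  # leave the input painting untouched
    for y in range(h):
        for x in range(w):
            a = alpha[y][x]
            background = original[top + y][left + x]
            # Convex combination: animation in the interior, painting at the seam.
            out[top + y][left + x] = a * animated[y][x] + (1.0 - a) * background
    return out


# Toy example: a dark 8x8 "painting" and a bright 6x6 "animated head crop".
painting = [[0.0] * 8 for _ in range(8)]
frame = [[1.0] * 6 for _ in range(6)]
blended = alpha_blend(painting, frame, top=1, left=1, border=2)
```

In this sketch the animated frame fully replaces the painting in the interior of the crop and fades out toward the crop boundary, so the seam shows no hard edge. Because the blend only mixes pixel intensities and does not change the motion itself, it is consistent with the observation that strong lines crossing the seam can still appear misaligned.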