Learning State Value Functions for Accelerating Multi-agent Planning for Emergent Narrative

Patrick Eppensteiner

Bachelor's Thesis, July 2021

Supervisors: Dr. Stéphane Magnenat, Henry Raymond, Prof. Dr. Bob Sumner

Abstract

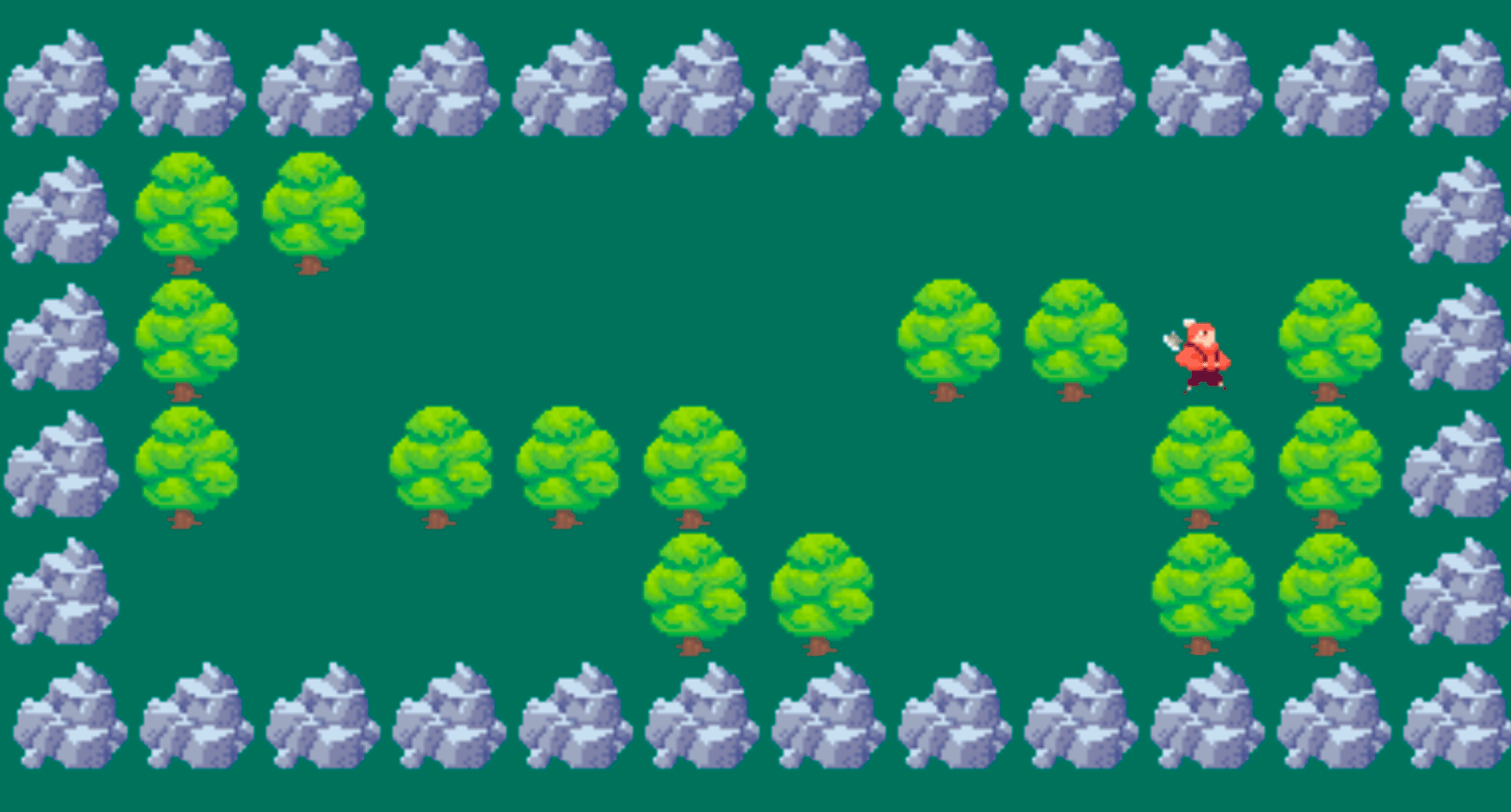

This thesis explores the development and implementation of machine learning (ML) methods that learn state value functions to improve Monte-Carlo Tree Search (MCTS) for discovering emergent narrative in agent planning. It builds upon a prior implementation of MCTS that provides the core system for creating turn-based simulations with planning agents. We modify this core system so that user-defined methods can be easily implemented and used to aid the search. Using the lumberjacks simulation from that prior work as a simple test environment, we develop and evaluate the ML methods and show that they yield performance gains in both speed and efficiency. We also discuss the architectures used, their results, and possible approaches to further improve the quality of the methods.
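To illustrate where a state value function can aid MCTS, here is a minimal sketch of a generic UCT-style search in which leaf evaluation is delegated to an optional user-supplied `value_fn`, with a random rollout as the fallback. It assumes a hypothetical single-agent toy environment (an agent moving -1 or +1 on a number line for a fixed number of turns, rewarded by its final position). This is not the lumberjacks simulation or the prior implementation. `State`, `ACTIONS`, `random_rollout`, `uct`, `mcts` and `value_fn` are all illustrative names, not the thesis's API.

```python
import math
import random
from dataclasses import dataclass, field
from typing import Callable, Dict, Optional

# Hypothetical toy environment (NOT the lumberjacks simulation): an agent on a
# number line moves -1 or +1 each turn; its final position is the reward.
ACTIONS = (-1, +1)


@dataclass(frozen=True)
class State:
    position: int
    turns_left: int

    def is_terminal(self) -> bool:
        return self.turns_left == 0

    def step(self, action: int) -> "State":
        return State(self.position + action, self.turns_left - 1)

    def reward(self) -> float:
        return float(self.position)


def random_rollout(state: State, rng: random.Random) -> float:
    """Default leaf evaluation: play uniformly random actions to the end."""
    while not state.is_terminal():
        state = state.step(rng.choice(ACTIONS))
    return state.reward()


@dataclass
class Node:
    state: State
    parent: Optional["Node"] = None
    children: Dict[int, "Node"] = field(default_factory=dict)
    visits: int = 0
    total_value: float = 0.0


def uct(child: Node, parent_visits: int, c: float) -> float:
    """UCB1 score: mean value plus an exploration bonus."""
    mean = child.total_value / child.visits
    return mean + c * math.sqrt(math.log(parent_visits) / child.visits)


def mcts(root_state: State, iterations: int,
         value_fn: Optional[Callable[[State], float]] = None,
         c: float = 1.4, seed: int = 0) -> int:
    """Return the most-visited root action after `iterations` simulations."""
    rng = random.Random(seed)
    root = Node(root_state)
    for _ in range(iterations):
        node = root
        # 1. Selection: descend while the node is fully expanded.
        while not node.state.is_terminal() and len(node.children) == len(ACTIONS):
            parent_visits = node.visits
            node = max(node.children.values(),
                       key=lambda ch: uct(ch, parent_visits, c))
        # 2. Expansion: add one untried action as a new child.
        if not node.state.is_terminal():
            action = next(a for a in ACTIONS if a not in node.children)
            child = Node(node.state.step(action), parent=node)
            node.children[action] = child
            node = child
        # 3. Evaluation: exact reward at terminals, else the value function
        #    (e.g. a learned model) if given, else a random rollout.
        if node.state.is_terminal():
            value = node.state.reward()
        elif value_fn is not None:
            value = value_fn(node.state)
        else:
            value = random_rollout(node.state, rng)
        # 4. Backpropagation: update statistics along the path to the root.
        while node is not None:
            node.visits += 1
            node.total_value += value
            node = node.parent
    return max(root.children, key=lambda a: root.children[a].visits)
```

For example, `mcts(State(0, 5), 300, value_fn=lambda s: float(s.position))` uses the current position as a stand-in heuristic for a trained value function and prefers moving `+1`. The appeal of this design is that a single call to `value_fn` replaces a whole rollout at each leaf. A learned estimate can therefore save simulation time, provided it is accurate enough to guide the search.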