Object Classification and Tracking for Augmented Reality Applications

Pascal Maillard

Master's Thesis, April 2022

Supervisors: Dr. Henning Metzmacher, Dr. Fabio Zünd, Prof. Dr. Bob Sumner

Abstract

Augmented reality (AR) offers novel ways to explore and interact directly with the real environment. To encourage a wider, and in particular a younger, audience to engage directly with artworks, the artworks are brought into an interactive AR application.

This thesis aims to develop an approach for the detection and classification of statues, together with an appropriate data acquisition and training pipeline. It further aims to implement an AR prototype in Unity that demonstrates the capabilities of the chosen approach. The pipeline should cover the whole process, from data acquisition to the preparation of the final training data.

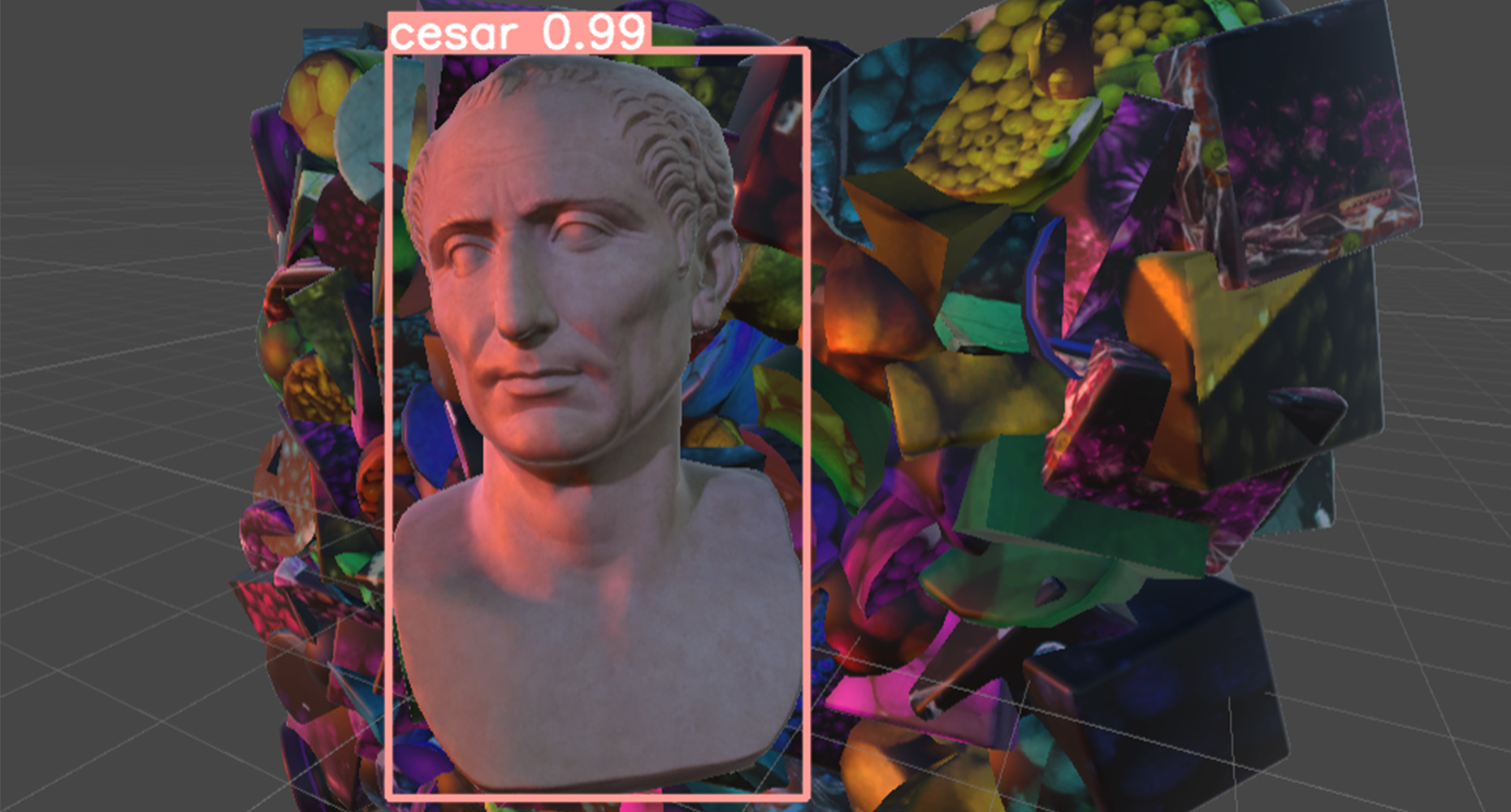

The neural network (NN) model YOLOv5 performs the object detection task of recognising and classifying the statues in images. The resulting image coordinates, together with tracking information, are then used to infer and track the positions of the statues in 3D space. Additional spatial and temporal constraints are introduced to make the detections more stable.
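The abstract does not specify how the 2D detections are lifted into 3D. One common approach, sketched here as an assumption rather than as the method of this thesis, is to back-project the centre of each bounding box through a pinhole camera model into a world-space ray using the camera pose provided by AR tracking, and then take the least-squares point closest to the rays collected from several frames. The temporal constraint is illustrated with a simple hypothetical rule: a statue is only reported once it has been detected in at least `min_hits` of the last `window` frames. The names `pixel_to_ray`, `triangulate_rays` and `TemporalFilter`, as well as all parameter values, are illustrative.

```python
from collections import deque

import numpy as np


def pixel_to_ray(u, v, K, R, cam_pos):
    """Back-project pixel (u, v) into a world-space ray (hypothetical helper).

    K: 3x3 pinhole intrinsics; R: 3x3 camera-to-world rotation;
    cam_pos: camera centre in world coordinates (e.g. from AR tracking).
    Returns (origin, unit direction).
    """
    d_cam = np.linalg.solve(K, np.array([u, v, 1.0]))  # direction in camera frame
    d_world = R @ d_cam                                 # rotate into world frame
    return np.asarray(cam_pos, dtype=float), d_world / np.linalg.norm(d_world)


def triangulate_rays(origins, directions):
    """Return the point minimising the summed squared distance to all rays."""
    A = np.zeros((3, 3))
    b = np.zeros(3)
    for o, d in zip(origins, directions):
        P = np.eye(3) - np.outer(d, d)  # projector onto the plane orthogonal to d
        A += P
        b += P @ o
    return np.linalg.solve(A, b)  # singular if all rays are parallel


class TemporalFilter:
    """Accept a detection only if seen in >= min_hits of the last `window` frames."""

    def __init__(self, window=5, min_hits=3):
        self.history = deque(maxlen=window)
        self.min_hits = min_hits

    def update(self, detected):
        self.history.append(bool(detected))
        return sum(self.history) >= self.min_hits


if __name__ == "__main__":
    K = np.array([[500.0, 0.0, 320.0], [0.0, 500.0, 240.0], [0.0, 0.0, 1.0]])
    R = np.eye(3)
    # A statue centre at (0, 0, 5) observed from two camera positions 1 m apart.
    rays = [pixel_to_ray(320, 240, K, R, [0, 0, 0]),
            pixel_to_ray(220, 240, K, R, [1, 0, 0])]
    print(triangulate_rays([o for o, _ in rays], [d for _, d in rays]))
```

Averaging over several views makes the estimate less sensitive to noise in any single bounding box, while the temporal filter suppresses detections that flicker in and out for only a frame or two.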

The data pipeline automates the extraction of images from videos and filters out blurry ones. Photogrammetry is then used to reconstruct a 3D model from these photos. Finally, the game engine Unity generates additional synthetic data from the 3D models, varying the illumination, orientation and occlusion of the objects.
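The abstract does not name the blur criterion used. A widely used heuristic, shown here purely as an assumed stand-in, is the variance of the Laplacian: sharp frames contain strong edges and therefore produce a high-variance Laplacian response, whereas blurry frames do not. The sketch keeps every `step`-th frame of a video whose score exceeds a threshold. The functions `laplacian_variance` and `select_sharp_frames` and the default `step` and `threshold` values are hypothetical and would need tuning on real footage; frames are assumed to be already-decoded greyscale NumPy arrays.

```python
import numpy as np


def laplacian_variance(gray):
    """Sharpness score: variance of the 4-neighbour Laplacian of a greyscale image."""
    g = np.asarray(gray, dtype=float)
    lap = (g[:-2, 1:-1] + g[2:, 1:-1]    # up and down neighbours
           + g[1:-1, :-2] + g[1:-1, 2:]  # left and right neighbours
           - 4.0 * g[1:-1, 1:-1])        # centre pixel (borders are skipped)
    return float(lap.var())


def select_sharp_frames(frames, step=10, threshold=100.0):
    """Return indices of sampled frames that are sharp enough to keep."""
    return [i for i, frame in enumerate(frames)
            if i % step == 0 and laplacian_variance(frame) > threshold]


if __name__ == "__main__":
    checker = (np.indices((8, 8)).sum(axis=0) % 2) * 255.0  # high-frequency, "sharp"
    flat = np.full((8, 8), 128.0)                           # no edges, "blurry"
    print(select_sharp_frames([checker, flat, checker, flat], step=1))  # prints [0, 2]
```

With OpenCV, `cv2.Laplacian(gray, cv2.CV_64F).var()` yields a similar score (it handles image borders differently), which may be more convenient when frames are read with `cv2.VideoCapture`.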

The results show that the model handles the common challenges of varying illumination and of occlusion up to 30% well. Under heavier occlusion of 40%, YOLOv5n6 performed best in both accuracy and recall, with an mAP@0.5 of 0.728.
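For reference, mAP@0.5 counts a predicted box as a true positive when its intersection over union (IoU) with an unmatched ground-truth box of the same class is at least 0.5, computes the average precision (AP) as the area under the resulting precision-recall curve for each class, and averages AP over all classes. The following is a simplified single-class sketch of IoU and all-point interpolated AP. It assumes detections have already been matched to ground truth (`is_tp`), and YOLOv5's own evaluation code may interpolate the curve differently, so its numbers can differ slightly.

```python
import numpy as np


def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0


def average_precision(scores, is_tp, num_gt):
    """All-point interpolated AP for one class (num_gt must be > 0)."""
    order = np.argsort(-np.asarray(scores, dtype=float))  # highest confidence first
    tp = np.asarray(is_tp, dtype=float)[order]
    tp_cum = np.cumsum(tp)
    fp_cum = np.cumsum(1.0 - tp)
    recall = tp_cum / num_gt
    precision = tp_cum / (tp_cum + fp_cum)
    # Pad the curve and make precision monotonically non-increasing in recall.
    mrec = np.concatenate(([0.0], recall, [1.0]))
    mpre = np.concatenate(([1.0], precision, [0.0]))
    mpre = np.maximum.accumulate(mpre[::-1])[::-1]
    # Sum rectangle areas wherever recall changes.
    idx = np.where(mrec[1:] != mrec[:-1])[0]
    return float(np.sum((mrec[idx + 1] - mrec[idx]) * mpre[idx + 1]))


if __name__ == "__main__":
    print(iou((0, 0, 2, 2), (1, 1, 3, 3)) >= 0.5)  # False: IoU is 1/7
    # Three detections, two ground-truth objects: AP is about 0.833.
    print(average_precision([0.9, 0.8, 0.7], [True, False, True], num_gt=2))
```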

The developed data pipeline can generate synthetic training data from recordings of the objects; videos of 30 seconds or less have proven sufficient. Our method for localising objects in 3D space from 2D images has proven successful in the various examples presented in this work.